An Introduction to Azure Stream Analytics Job

[toc]

Introduction

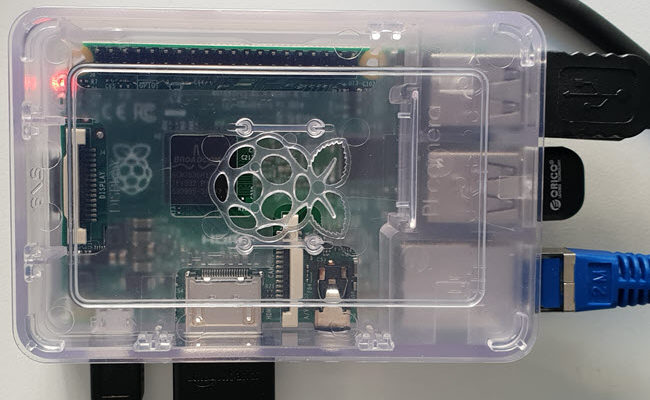

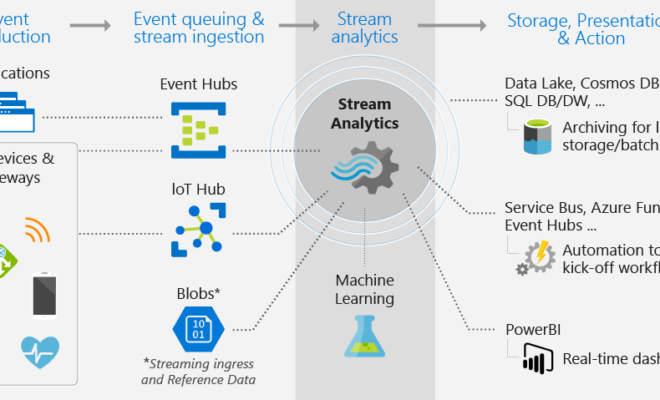

The capability of an Azure Stream Analytics Job is a lot, here in this post we are going to discuss a few of them. An Azure Stream Analytics is basically an engine which processes the events. These events are coming from the devices we have configured, it can be an Azure IoT Dev Kit (MXChip) or a Raspberry Pi and many more. The stream analytics job has two vital parts

- Input source

- Output source

The input source is the source of your streaming data, in my case, it is my IoT Hub. And the output source is the output what you are configuring. I had configured the output to save the data to an Azure SQL database. Let’s just stop the introduction part now and start creating our own Stream Analytics.

Background

I recently got my MXChip (Azure Iot Dev Kit) and I was surprised with the capabilities that device can do. It has a lot of sensors within the device, like temperature, humidity, pressure, magnetometer, security etc. Then I thought it is time to play with the same. So the basic idea here was to,

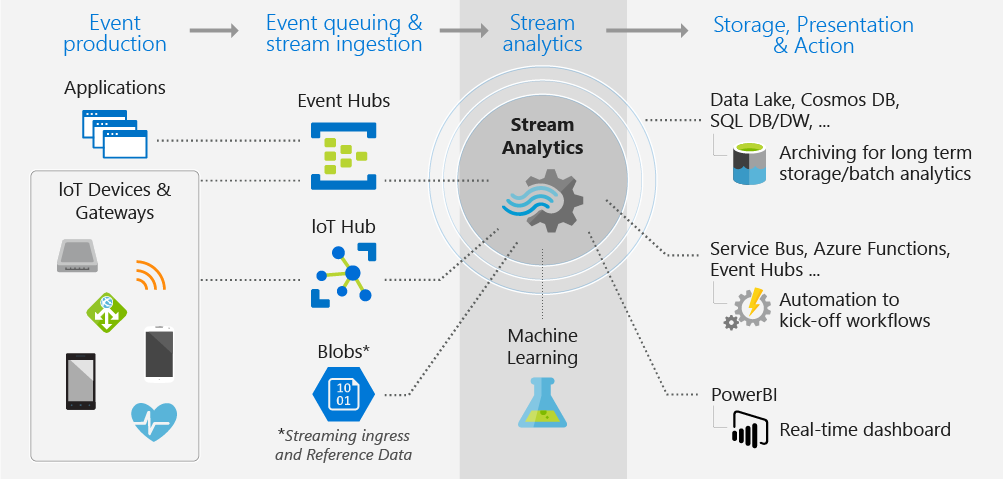

- Configure the device to send the data to the IoT Hub

- Select the IoT Hub as a stream input

- Send the output to an SQL Server database

In this article, we are going to concentrate on how to create a Stream Analytics Job and how you can configure the same to save the stream data to the SQL Server database.

Prerequisites

To do the wonderful things, we always need some prerequisites.

- Azure Subscription

- MXChip Azure IoT Dev Kit

- An active IoT Hubows Driver Kit (WDK) 10

- IoT Core ADK Add-Ons

- Windows 10 IoT Core Packages

- The Raspberry Pi BSP

- Custom FFU image we have created

Creating the Azure Stream Analytics Job

Login to your Azure Portal and click on the Create a resource, and then search for the “Stream Analytics job”.

Once you clicked on the Create button, it is time to specify the details of your job.

- Job Name

- Subscription

- Resource Group

- Location

- Hosting Environment

I would strongly recommend you to select the same resource group of your IoT Hub for the Stream Analytics Job as well so that you can easily delete the resources when there are not needed. Once the deployment is successful you can go to the resource overview and see the details.

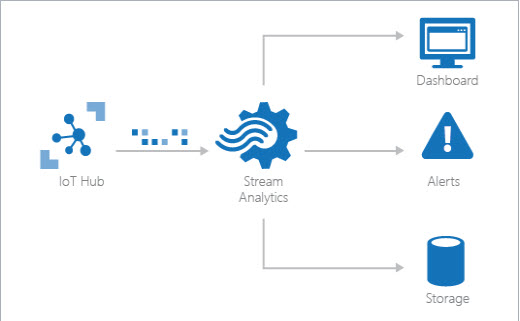

Configure Inputs

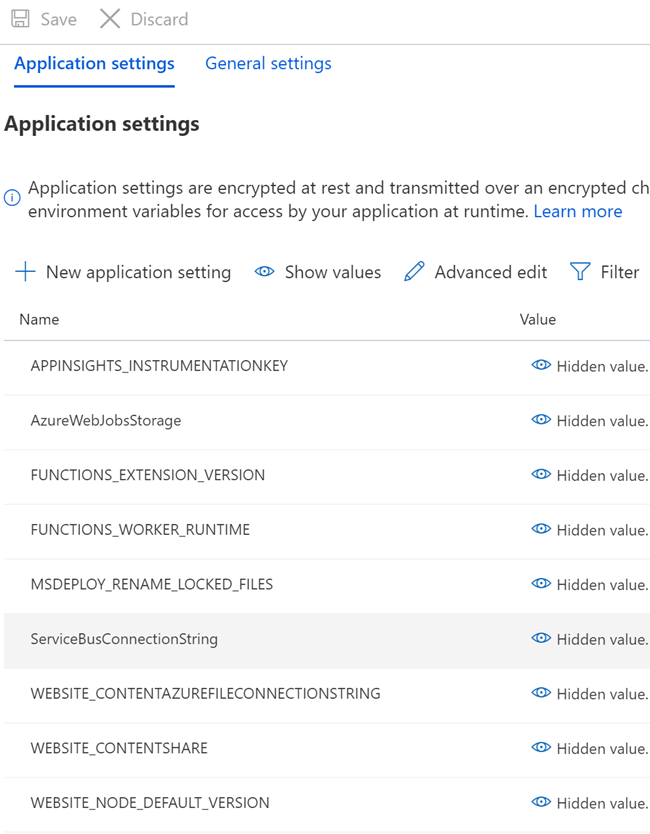

In the left menu, you can see a section called Job topology, that’s where we are going to work. Basically, we will be setting the Inputs and Outputs and then we will be writing a query which can take the inputs and send the values to the configured output. Click on the Inputs label and click on Add stream input and then select the IoT Hub.

In the next screen, you will have options to select the existing IoT hub and to create a new IoT Hub. As I have already created an IoT hub, I would select the existing one.

Please be noted that you are allowed to use special characters in the Input alias field, but if you use such, please make sure to include the same inside [] in the query, which we will be creating later.

About the special characters in Input alias field

Once you are successfully configured the Inputs, then we can go ahead and configure the outputs.

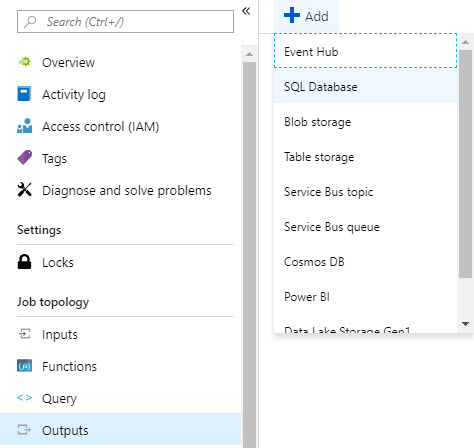

Configure Outputs

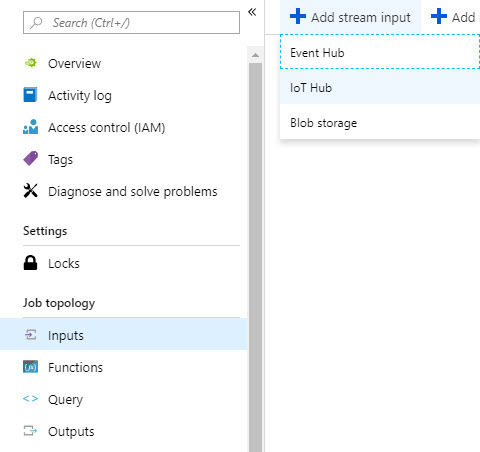

Click on the Outputs from the Job topology section and click Add, and then select

You can either create a new Database or select the one you had already created. I used the existing database and table.

Configure the Query

Once you click the label Query on the left pan, you will be given an editor where you can write your queries. I am using the below query.

SELECT

messageId,

deviceId,

temperature,

humidity,

pressure,

pointInfo,

IoTHub,

EventEnqueuedUtcTime,

EventProcessedUtcTime,

PartitionId

INTO

streamoutputs

FROM

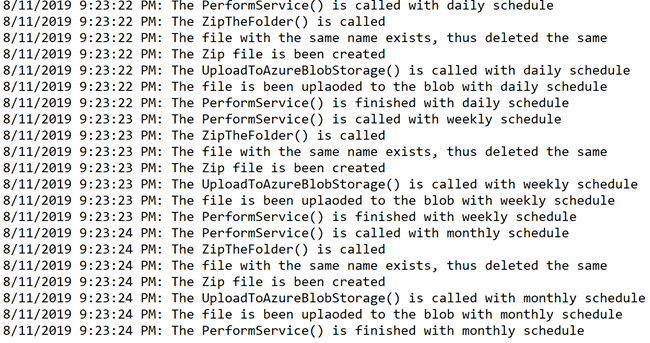

streaminputsAs you can see that I am just selecting the fields I may need and saving the same to our stream outputs. You can always select all the fields by using the select * query, but the problem with that is, you will have to set up the table columns in the same order of the stream data. Otherwise, you may get an error as below.

Encountered error trying to write 1 event(s): Failed to locate column ‘IoTHub’ at position 6 in the output event

Stream analytics query error

If there are any errors, you can see that in the Output details.

Run the Stream Analytics Job and See the Data in the Database

As we have already done the initial set up, we can now start our Stream Analytics Job, please make sure that the IoT Hub is running and the device is sending data to the IoT Hub. If everything is working as expected, you will be able to see the data in the SQL server database. You can either connect your MXChip device to the network and test this or use the custom simulator app.

If you are using the Simulator console application, make sure that you are giving the device id, key and the IoT hub uri correctly, otherwise you will get an unauthorized error as explained here.

Test the Stream Analytics Job Inside the Portal

You also have an option to test the functionality in the portal itself. The only thing you will have to do is to prepare the sample input data. I have prepared the sample JSON data as follows.

[

{

"deviceId": "test-device",

"humidity": 77.699449415178719,

"pointInfo": "This is a normal message.",

"temperature": 32.506656929620846

},

{

"deviceId": "test-device",

"temperature": 52.506656929620846,

"humidity": 17.699449415178719,

"pointInfo": "This is a normal message."

},

{

"deviceId": "test-device",

"temperature": 42.506656929620846,

"humidity": 57.699449415178719,

"pointInfo": "This is a normal message."

}

]

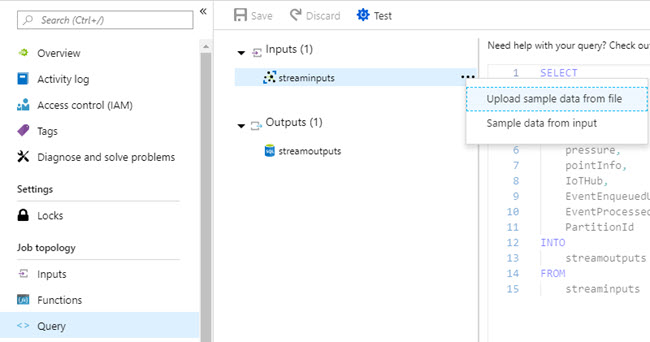

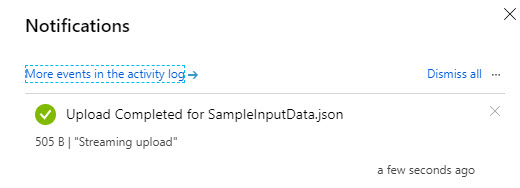

Now we can go to the Query section and upload the sample data file for our inputs.

In the next window, you can select the JSON option and upload your JSON file.

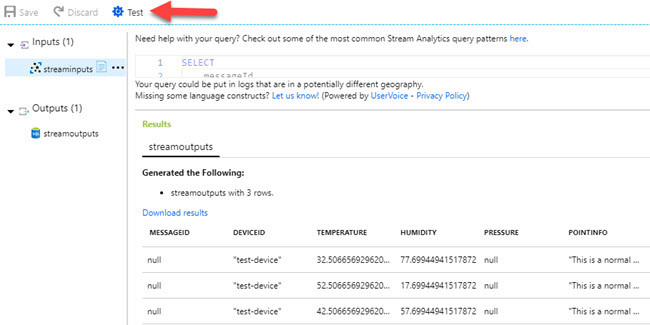

Click the Test button, and now you should be able to see the output as below.

Conclusion

Wow!. Now we have learned,

- What is Azure Stream Analytics Job

- how to create Azure Stream Analytics Job

- how to add Inputs to the Azure Stream Analytics

- how to add Outputs to the Azure Stream Analytics

- how to add custom Query in Azure Stream Analytics

- how to Test the Stream Analytics Query with sample data

You can always ready my IoT articles here.

Your turn. What do you think?

Thanks a lot for reading. Did I miss anything that you may think which is needed in this article? Could you find this post as useful? Kindly do not forget to share me your feedback.

Kindest Regards

Sibeesh Venu